Tech Project

Description of the challenges faced by the Tech Project

The team is developing a web-based ecosystem of software and hardware to create embodied collective interaction with media, particularly with sound and music. In this context, the challenge we propose is to invent, design and develop novel scenarios, interaction paradigms and gameplay – by means of any artistic form with a strong emphasis on sonic aspects – that implement, support and/or question human-to-human interaction through the mediation of these technologies.

Brief description of technology

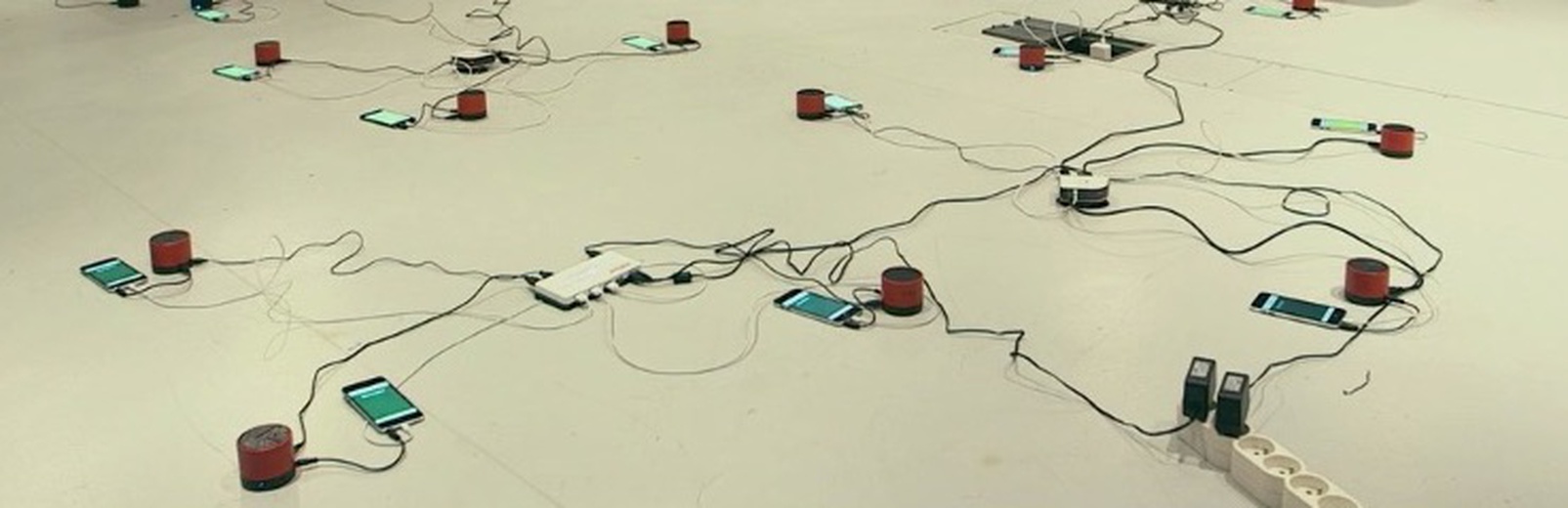

Our framework is composed of two complementary aspects: a technical part dedicated to designing and implementing collective and interactive scenarios; and a theoretical part based on the concept of interaction topologies, aimed at describing and analysing such systems. The framework focuses on scenarios of collective musical interaction within a distributed environment composed of numerous devices, such as smartphones and IoT devices. The platform is built on web technologies and JavaScript, and concentrates on synchronization, real-time communication and multimedia rendering across multiple components of a common network.

What the project is looking to gain from the collaboration and what kind of artist would be suitable

We have developed several technological tools, software, prototypes and possible use cases for mobile and ubiquitous technologies in various artistic contexts. Possible uses include, for example, audience participation in concerts and installations, or the use of gesture and movement in performance. We are interested in expanding our current platform by co-creating novel uses and approaches in close collaboration with artists. We also aim to foster a stimulating art-science-technology dialogue around the development of technology, its uses, and critical thinking about such tools and methods. We are looking for composers, sound designers, musicians and artists who wish to collaborate, co-create novel collective musical experiences, and document and think critically about the whole process.

Resources available to the artist

The artist will collaborate closely with the Sound Music Movement Interaction team and will have access to IRCAM facilities. In particular, we will provide the artist with a set of libraries and applications specifically designed for rapid prototyping of collective musical interaction (https://github.com/collective-soundworks), sound analysis, synthesis and visualization (https://github.com/wavesjs), and gesture and movement recognition using interactive machine learning (http://como.ircam.fr). When possible, we will also provide a set of motion sensors, microcomputers and/or mobile devices, subject to constraints imposed by other concurrent projects.